When setting up a coding environment, one of the most common questions developers ask is, “Is a graphics card needed for coding?” Graphics cards are known for powering high-performance tasks in gaming and graphic design, but is one necessary for programming? Let’s look at what coding actually requires, when a graphics card might help, and how to choose the right setup for your coding goals.

What Is a Graphics Card and Why Do We Use It?

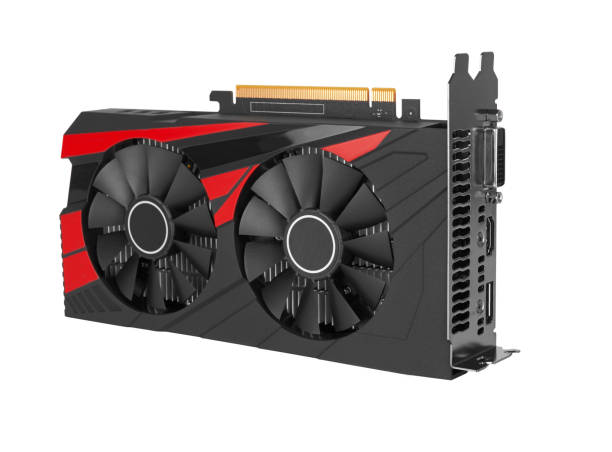

A graphics card, also known as a GPU (Graphics Processing Unit), is responsible for rendering images, animations, and videos on your screen. Most people associate graphics cards with gaming, 3D rendering, and other visually intensive tasks. For coders, the more useful question is what role a graphics card plays in their work and whether they need one at all.

There are two main types of graphics cards:

- Integrated Graphics: These are built into the CPU (Central Processing Unit) and share the system’s RAM instead of having memory of their own. They are energy-efficient, budget-friendly, and suitable for general-purpose computing, like web browsing, word processing, and light coding tasks.

- Dedicated Graphics Cards: These are standalone units designed specifically for processing visual information. They have their own memory (VRAM), allowing for higher performance, and are often preferred for gaming, video editing, 3D modeling, and other graphically demanding tasks.

So, where does coding fit in? Let’s take a closer look.

Types of Coding and How Hardware Affects Each

Not all coding is the same. Some coding tasks involve simple web design, while others focus on complex 3D modeling or machine learning algorithms. The type of coding you do largely determines whether you’ll benefit from a graphics card.

1. Basic Coding (Web Development, Scripting)

Most web development tasks, including HTML, CSS, and JavaScript, don’t require a graphics card. Similarly, scripting languages like Python or Ruby, used for backend development, don’t rely on graphical processing. Here’s why:

- Processing Power: Web development and scripting rely on CPU performance rather than GPU performance.

- Memory Needs: Generally, 8-16 GB of RAM is more than sufficient for smooth performance in basic coding tasks.

For these kinds of coding, integrated graphics will usually be enough, and the extra investment in a dedicated graphics card is unnecessary.

2. App and Software Development

Building applications, even in full-featured IDEs such as Visual Studio or Xcode, doesn’t demand a high-performance graphics card. The CPU and RAM are the primary factors here, not the GPU. However, a decent GPU can improve your experience if you work with emulators or simulators that display UI elements or animations.

For most app development tasks, integrated graphics will handle the job, but developers working on apps that need graphical simulations or visualizations may see a minor performance boost with a dedicated GPU.

3. Game Development and Graphics-Intensive Coding

This is where things change. Game development and other graphics-intensive projects like VR (Virtual Reality) and AR (Augmented Reality) require powerful visuals, which makes a dedicated graphics card almost essential. Game engines like Unity and Unreal Engine rely on GPUs to handle complex textures, lighting, shadows, and other graphical elements.

For game developers, having a good graphics card is crucial for:

- Real-time Rendering: Helps visualize the final look of the game during the development phase.

- Smooth Workflow: Minimizes lag in the editor and allows for quicker testing and debugging.

- Enhanced Productivity: GPU-accelerated tasks, such as baking lighting in engines that support it, finish faster, which shortens each iteration of the development process.

In short, if you’re pursuing game development or work with graphically intensive projects, investing in a high-performance graphics card is highly recommended.

4. Machine Learning and Data Science

When it comes to machine learning and data science, whether you need a GPU depends on the complexity of the tasks you’re handling. GPUs contain hundreds to thousands of small cores that perform many calculations in parallel, which suits the large matrix operations involved in training machine learning models and working with large datasets.

For data scientists and machine learning engineers, a powerful graphics card can provide:

- Faster Model Training: GPUs can process large volumes of data quickly, making them ideal for deep learning models.

- Enhanced Computational Power: Speeds up neural network training, making it possible to handle data-heavy tasks that would otherwise slow down on a CPU.

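In these cases, opting for a high-performance GPU is ideal. Common choices are NVIDIA’s GeForce RTX cards: most deep learning frameworks, including PyTorch and TensorFlow, target NVIDIA’s CUDA platform first, and RTX cards add Tensor Cores that speed up the matrix math used in training. Older GTX cards also support CUDA but lack Tensor Cores.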
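In practice, frameworks make the GPU optional: ML code typically checks for a CUDA device at runtime and falls back to the CPU, so the same script runs on a laptop with integrated graphics and on a machine with an NVIDIA card. Below is a minimal sketch assuming PyTorch as the framework (the article doesn’t name one). `pick_device` is a hypothetical helper; `torch.cuda.is_available()`, `torch.cuda.get_device_name()`, and `torch.randn(..., device=...)` are real PyTorch calls. If PyTorch isn’t installed, the script still runs and reports the CPU.

```python
"""Choose a compute device for ML code, falling back to the CPU.

Assumes PyTorch as the framework (an assumption; the article names none).
If PyTorch is not installed, the script still runs and reports "cpu".
"""

try:
    import torch  # optional dependency
except ImportError:
    torch = None


def pick_device() -> str:
    """Return "cuda" if PyTorch can see an NVIDIA GPU, else "cpu".

    pick_device is a hypothetical helper name used for illustration.
    """
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    if device == "cuda":
        # Index 0 is the first GPU visible to CUDA.
        print(f"Using GPU: {torch.cuda.get_device_name(0)}")
    else:
        print("No CUDA GPU found; using the CPU (slower for deep learning).")

    if torch is not None:
        # The same matrix multiply runs on whichever device was selected.
        x = torch.randn(1024, 1024, device=device)
        y = x @ x
        print(f"Result shape: {tuple(y.shape)} on {y.device}")
```

Treating the GPU as an optimization rather than a requirement means you can prototype on modest hardware and move the same code to a GPU machine, or a rented cloud GPU, when training gets slow.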

Why You Might Not Need a Graphics Card for Most Coding Tasks

For many programmers, a graphics card isn’t necessary for day-to-day tasks. Here’s why:

- Coding Relies on CPU and RAM: A strong CPU and ample RAM are the core components for coding, allowing for smooth multitasking, compiling code, and running applications.

- Integrated Graphics are Sufficient: Integrated GPUs, found in most laptop and many desktop processors, handle everyday coding tasks effectively. Unless you’re working on visually demanding projects, integrated graphics are a cost-effective solution.

- Cloud-Based Options: If your project does require more graphical processing power, cloud services like AWS, Google Cloud, or Microsoft Azure offer virtual machines with high-end GPUs for rent. This allows you to perform graphics-intensive tasks without needing to buy expensive hardware.
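If you’re not sure what your current machine already has, a short script can report the components discussed above. This is a rough sketch using only the Python standard library, with two stated limitations: the total-RAM lookup relies on `os.sysconf`, which is available on Linux and macOS but not on Windows, and the GPU check only detects NVIDIA cards by looking for the `nvidia-smi` tool installed with NVIDIA’s driver. The helper names (`total_ram_gb`, `nvidia_gpus`, `hardware_summary`) are hypothetical.

```python
"""Report CPU cores, RAM, and NVIDIA GPUs using only the standard library."""

import os
import shutil
import subprocess
from typing import List, Optional


def total_ram_gb() -> Optional[float]:
    """Return total physical RAM in GB, or None where os.sysconf is unavailable (e.g. Windows)."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        num_pages = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None
    if page_size <= 0 or num_pages <= 0:
        return None
    return page_size * num_pages / 1024**3


def nvidia_gpus() -> List[str]:
    """Return NVIDIA GPU names reported by nvidia-smi, or [] if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=10,
            check=False,
        )
    except (OSError, subprocess.SubprocessError):
        return []
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def hardware_summary() -> dict:
    """Collect the hardware details most relevant to a coding setup."""
    return {
        "cpu_cores": os.cpu_count(),  # logical cores; may be None
        "ram_gb": total_ram_gb(),
        "nvidia_gpus": nvidia_gpus(),
    }


if __name__ == "__main__":
    info = hardware_summary()
    print(f"Logical CPU cores: {info['cpu_cores']}")
    ram = info["ram_gb"]
    print(f"Total RAM: {ram:.1f} GB" if ram is not None else "Total RAM: unknown on this OS")
    if info["nvidia_gpus"]:
        print("NVIDIA GPUs:", ", ".join(info["nvidia_gpus"]))
    else:
        print("No NVIDIA GPU detected (integrated or non-NVIDIA graphics may still be present).")
```

An empty GPU list doesn’t mean the machine has no graphics at all; it only means no NVIDIA driver tools were found, which is the common case for laptops relying on integrated graphics.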

When a Graphics Card Can Improve Coding Efficiency

While not essential, a graphics card can improve the coding experience in specific cases, such as:

- Running Multiple Monitors: Most integrated graphics can drive a couple of displays, but a dedicated GPU adds more video outputs and copes better with several high-resolution screens.

- Video Editing for Coding Projects: If your coding involves video editing, such as creating tutorials or presentations, a GPU can reduce rendering times.

- Graphical Simulations: In fields like data visualization or scientific research, coding sometimes involves graphical simulations where a GPU can accelerate the process.

Recommended Graphics Cards for Coders (If Needed)

If you’ve decided a graphics card is necessary for your work, here are some options to consider based on different coding needs:

- Entry-Level Options:

  - NVIDIA GTX 1650: Suitable for lightweight tasks, basic game development, and handling dual monitors.

  - AMD Radeon RX 550: An affordable option that can handle 3D rendering on a smaller scale.

- Mid-Range Options:

  - NVIDIA GTX 1660: A solid choice for game development and light machine learning tasks.

  - AMD Radeon RX 580: Great for moderate graphical demands and capable of running many VR and AR projects.

- High-End Options:

  - NVIDIA RTX 3080: Ideal for machine learning, game development, and handling complex graphical simulations.

  - AMD Radeon RX 6900 XT: Comparable to the RTX 3080 in gaming and rendering performance. For machine learning, check that your framework supports AMD’s ROCm platform, since most tools target NVIDIA’s CUDA first.

These options give you a range of choices depending on your needs and budget.

Conclusion

Do You Really Need a Graphics Card for Coding?

In most cases, a graphics card is not essential for coding. For web development, scripting, and general software development, a powerful CPU and sufficient RAM are the primary needs. However, in specialized fields like game development, machine learning, and data science, a dedicated GPU can make a significant difference.

The answer to “is a graphics card needed for coding” ultimately depends on the type of work you do. If you’re working on graphically intensive tasks, a graphics card is a worthwhile investment. But for most coding tasks, integrated graphics should be more than sufficient.
